Hi! I’m a PhD student in the Embodied Vision group, supervised by Jörg Stückler, and a member of the IMPRS-IS graduate school. Currently, I am particularly interested in sequential decision making with model-based planning and model-based reinforcement learning. I work on equipping intelligent agents with the ability to adapt to changes in the real world and to solve problems that require both long-term planning and fast reactive control. Featured below are selected projects; please see the Publications tab for a complete list of publications.

Physics-based models


Jan Achterhold, Philip Tobuschat, Hao Ma, Dieter Buechler, Michael Muehlebach, and Joerg Stueckler

Black-Box vs. Gray-Box: A Case Study on Learning Table Tennis Ball Trajectory Prediction with Spin and Impacts

L4DC 2023 - Paper at PMLR, Code on GitHub

In this paper, we present a novel physics-based (gray-box) approach for tracking and predicting the motion of a table tennis ball based on the extended Kalman filter. We also learn how the ball's spin depends on the launch process, which increases predictive accuracy. Our method outperforms non-physics-based (black-box) methods in predictive accuracy. Using our predictions, a robot arm successfully returns the ball in 29 of 30 trials.
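To make the gray-box idea more concrete, here is a minimal Python sketch of an extended Kalman filter for a flying ball. It assumes a simplified physics model with only gravity and quadratic air drag; spin (Magnus effect), table impacts, and the learned components of the paper are omitted. The drag coefficient `K_D`, the noise matrices `Q` and `R`, and all function names are hypothetical choices for illustration, not the paper's implementation.

```python
import numpy as np

# Hypothetical physics model: gravity plus quadratic air drag. The paper's
# model additionally covers spin (Magnus effect) and table impacts.
G = np.array([0.0, 0.0, -9.81])               # gravity [m/s^2]
K_D = 0.1                                     # assumed drag coefficient [1/m]
H = np.hstack([np.eye(3), np.zeros((3, 3))])  # only the position is measured


def f(x, dt):
    """Explicit Euler step of the state x = [position (3), velocity (3)]."""
    p, v = x[:3], x[3:]
    a = G - K_D * np.linalg.norm(v) * v
    return np.concatenate([p + dt * v, v + dt * a])


def jacobian_f(x, dt):
    """Analytic Jacobian of f with respect to x."""
    v = x[3:]
    speed = np.linalg.norm(v)
    # d(|v| v)/dv = |v| I + v v^T / |v|
    d_drag = speed * np.eye(3)
    if speed > 0:
        d_drag += np.outer(v, v) / speed
    F = np.eye(6)
    F[:3, 3:] = dt * np.eye(3)
    F[3:, 3:] -= dt * K_D * d_drag
    return F


def ekf_step(x, P, z, dt, Q, R):
    """One EKF predict/update cycle with a position measurement z."""
    F = jacobian_f(x, dt)  # linearize at the previous estimate
    x = f(x, dt)
    P = F @ P @ F.T + Q
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x = x + K @ (z - H @ x)
    P = (np.eye(6) - K @ H) @ P
    return x, P


def predict_trajectory(x, dt, steps):
    """Roll out the physics model to predict future ball positions."""
    positions = []
    for _ in range(steps):
        x = f(x, dt)
        positions.append(x[:3].copy())
    return np.array(positions)


# Usage: filter noisy position measurements of a simulated ball.
rng = np.random.default_rng(0)
dt = 0.01
x_true = np.array([0.0, 0.0, 0.3, 4.0, 0.5, 2.0])
x_est, P = np.zeros(6), 10.0 * np.eye(6)  # deliberately poor initial guess
Q, R = 1e-6 * np.eye(6), 1e-4 * np.eye(3)
for _ in range(50):
    x_true = f(x_true, dt)
    z = x_true[:3] + rng.normal(scale=0.01, size=3)
    x_est, P = ekf_step(x_est, P, z, dt, Q, R)
future = predict_trajectory(x_est, dt, steps=20)
```

Because the motion model is physics-based, the filter needs only a handful of parameters, and the same model `f` that drives the filter can be rolled out to predict the rest of the trajectory.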


Adaptive forward models


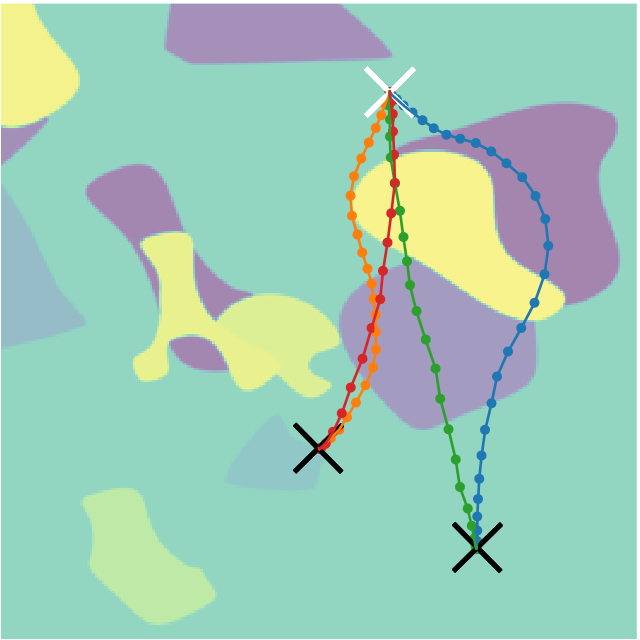

Suresh Guttikonda, Jan Achterhold, Haolong Li, Joschka Boedecker, and Joerg Stueckler

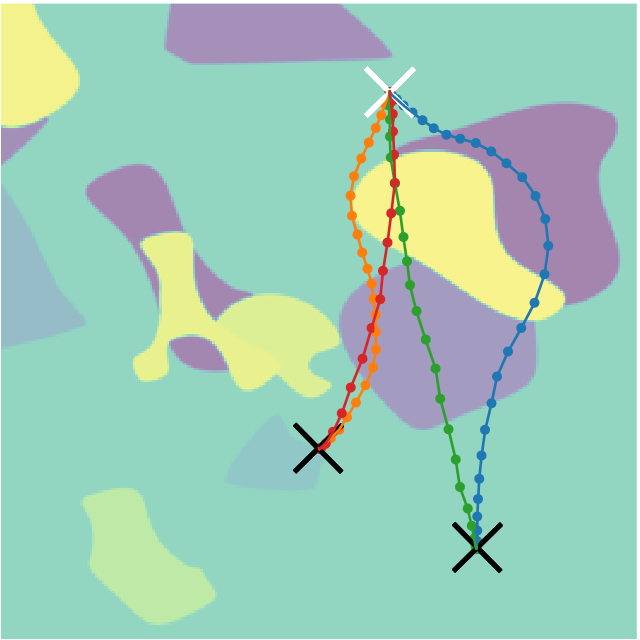

Context-Conditional Navigation with a Learning-Based Terrain- and Robot-Aware Dynamics Model

ECMR 2023 (oral) - arXiv preprint

In robot navigation, the robot has to deal with a multitude of variations: in addition to terrain properties, which vary spatially, the robot's own dynamics can change, e.g., due to wear and tear or different payloads. In this paper, we propose TRADYN, a model-based method that copes with both kinds of variation for terrain- and robot-aware navigation.


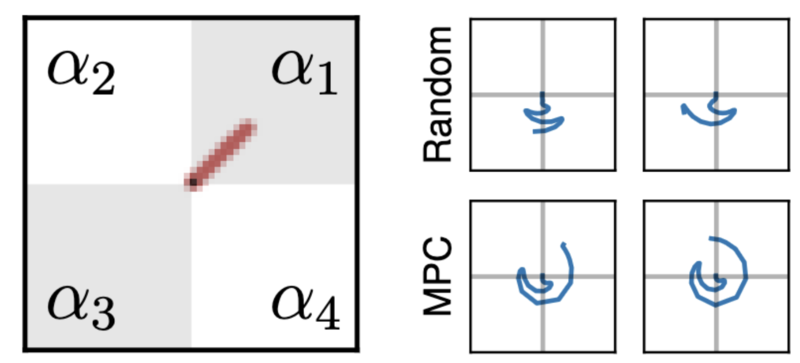

Jan Achterhold and Joerg Stueckler

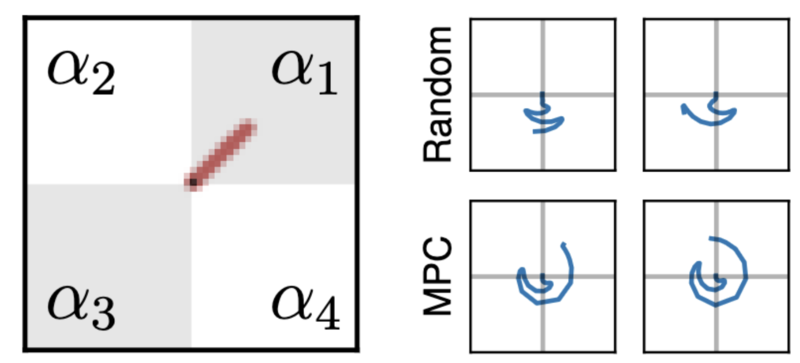

Explore the Context: Optimal Data Collection for Context-Conditional Dynamics Models

AISTATS 2021 - project page (paper, video, poster, code)

When an environment is subject to variations, the variation at hand needs to be identified to obtain an accurate forward model. This paper proposes an active learning scheme that uses the Expected Information Gain to select actions which quickly identify a particular environment among its variations. With the calibrated models, we successfully solve planning tasks.
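The principle of choosing actions by Expected Information Gain (EIG) can be illustrated with a deliberately simplified Python sketch. Instead of a learned context-conditional dynamics model, it assumes a small discrete set of candidate contexts (hypothetical masses in `CONTEXTS`), a toy one-dimensional forward model, and Gaussian observation noise. The EIG of an action $a$ is the mutual information between the unknown context $c$ and the next observation $y$, $I(c; y \mid a) = \mathbb{E}_{c, y}[\log p(y \mid c, a) - \log p(y \mid a)]$, estimated here by Monte Carlo sampling; after executing the most informative action, the belief over contexts is updated with Bayes' rule. All names and values are assumptions for illustration.

```python
import numpy as np

# Hypothetical setup: three candidate contexts (unknown masses) of a toy
# 1-D system, observed with Gaussian noise.
CONTEXTS = np.array([0.5, 1.0, 2.0])
NOISE_STD = 0.1


def predict_next(x, a, mass):
    """Toy forward model (assumption): a force a changes x by a / mass."""
    return x + a / mass


def log_gauss(y, mean, std):
    return -0.5 * ((y - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))


def expected_information_gain(x, a, belief, n_samples=2000, rng=None):
    """Monte Carlo estimate of I(context; y | x, a) under the current belief."""
    rng = np.random.default_rng() if rng is None else rng
    means = predict_next(x, a, CONTEXTS)  # predicted outcome per context, (K,)
    c = rng.choice(len(CONTEXTS), size=n_samples, p=belief)
    y = means[c] + rng.normal(scale=NOISE_STD, size=n_samples)
    log_lik = log_gauss(y, means[c], NOISE_STD)                  # log p(y | c, a)
    log_all = log_gauss(y[:, None], means[None, :], NOISE_STD)  # (N, K)
    log_marg = np.logaddexp.reduce(log_all + np.log(belief), axis=1)  # log p(y | a)
    return float(np.mean(log_lik - log_marg))


def update_belief(belief, x, a, y):
    """Bayes' rule over the discrete contexts after observing y."""
    log_post = np.log(belief) + log_gauss(y, predict_next(x, a, CONTEXTS), NOISE_STD)
    log_post -= np.logaddexp.reduce(log_post)
    return np.exp(log_post)


# Usage: pick the most informative action from a grid, then update the belief.
rng = np.random.default_rng(0)
belief = np.full(len(CONTEXTS), 1.0 / len(CONTEXTS))
x = 0.0
actions = np.linspace(-1.0, 1.0, 21)
eigs = [expected_information_gain(x, a, belief, rng=rng) for a in actions]
best = float(actions[int(np.argmax(eigs))])

y_obs = predict_next(x, best, 1.0)  # noise-free outcome if the true mass were 1.0
posterior = update_belief(belief, x, best, y_obs)
```

In this toy model, applying zero force yields no information because all contexts predict the same outcome, whereas larger forces separate the predictions and make the context easier to identify.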


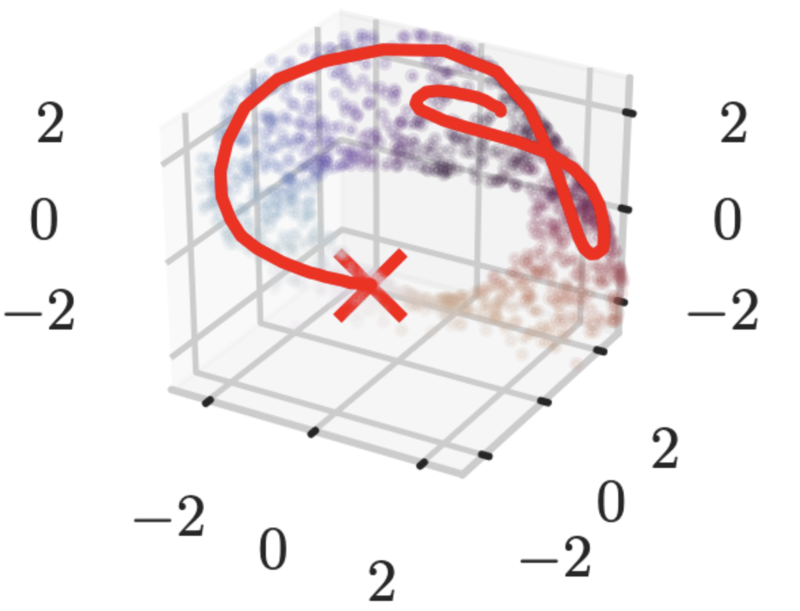

Nathanael Bosch, Jan Achterhold, Laura Leal-Taixé, and Joerg Stueckler

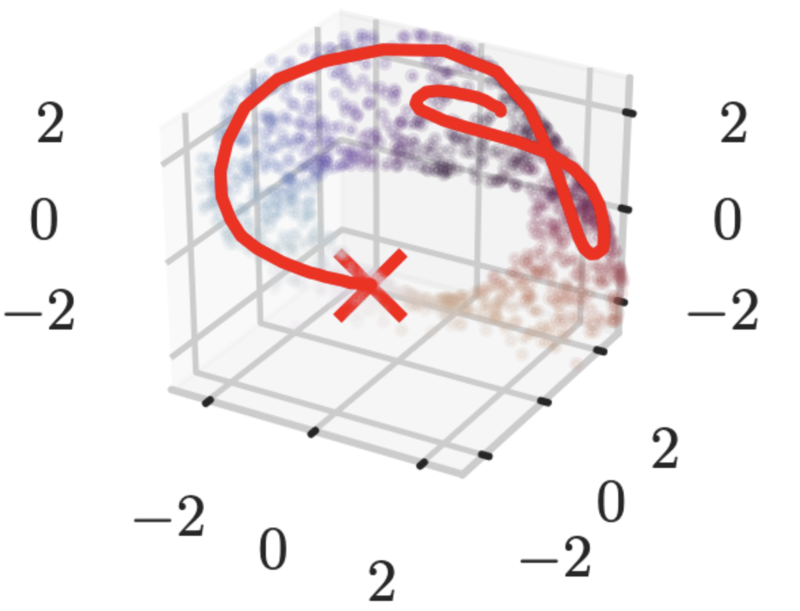

Planning from Images with Deep Latent Gaussian Process Dynamics

L4DC 2020 - project page (paper, poster, code)

In this project, we developed an intelligent agent that learns a forward dynamics model in the latent space of a variational autoencoder using Gaussian processes. This allows the agent to quickly adapt to changes in the environment dynamics without costly re-training and to plan with the adapted forward model.
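Why a Gaussian process forward model can adapt without re-training is visible in a minimal Python sketch: predictions come from conditioning on stored transitions, so adapting amounts to conditioning on new data. The sketch assumes that latent states are already given (the VAE encoder is omitted), uses fixed RBF kernel hyperparameters, and keeps only a sliding window of the most recent transitions; `GPDynamics`, `window`, and the toy dynamics are hypothetical and not the project's implementation.

```python
import numpy as np


def rbf(A, B, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between rows of A (N, D) and B (M, D)."""
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return variance * np.exp(-0.5 * np.maximum(d2, 0.0) / lengthscale**2)


class GPDynamics:
    """Hypothetical GP forward model on latent states.

    Inputs are [z_t, u_t]; the target is the latent change z_{t+1} - z_t.
    Kernel hyperparameters are fixed (assumption), so adapting to new
    dynamics only means conditioning on new transitions.
    """

    def __init__(self, noise=1e-2, window=30):
        self.noise = noise
        self.window = window  # keep only the most recent transitions
        self.X = np.empty((0, 2))
        self.Y = np.empty((0,))

    def add_data(self, X, Y):
        # Adaptation: append new transitions and drop the oldest ones.
        self.X = np.vstack([self.X, X])[-self.window:]
        self.Y = np.concatenate([self.Y, Y])[-self.window:]

    def predict(self, Xs):
        # GP posterior mean: k(Xs, X) (K + noise * I)^{-1} Y
        K = rbf(self.X, self.X) + self.noise * np.eye(len(self.X))
        alpha = np.linalg.solve(K, self.Y)
        return rbf(Xs, self.X) @ alpha


# Usage: toy 1-D latent state z and action u; the dynamics flip sign midway.
rng = np.random.default_rng(0)
gp = GPDynamics(window=30)
query = np.array([[0.0, 0.8]])  # z_t = 0.0, u_t = 0.8

z, u = rng.uniform(-1, 1, 30), rng.uniform(-1, 1, 30)
gp.add_data(np.stack([z, u], axis=1), 0.5 * u)   # original dynamics
before = gp.predict(query)[0]

z, u = rng.uniform(-1, 1, 30), rng.uniform(-1, 1, 30)
gp.add_data(np.stack([z, u], axis=1), -0.5 * u)  # changed dynamics
after = gp.predict(query)[0]
```

The sliding window is just one simple way to forget outdated transitions; the key point is that no gradient-based training happens when the dynamics change.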


Long-term planning and reactive control


Jan Achterhold, Markus Krimmel, and Joerg Stueckler

Learning Temporally Extended Skills in Continuous Domains as Symbolic Actions for Planning

CoRL 2022 (oral) - project page (paper, poster, video, code)

Long-term planning and reactive control are typically conflicting objectives in model-based planning. Here, we present a hierarchical agent that autonomously learns temporally extended skills and an associated forward model. The model captures the (temporally extended) effect of executing a skill and thus enables long-term planning. The planning result is a sequence of skills, which the learned skill policies then execute reactively.
