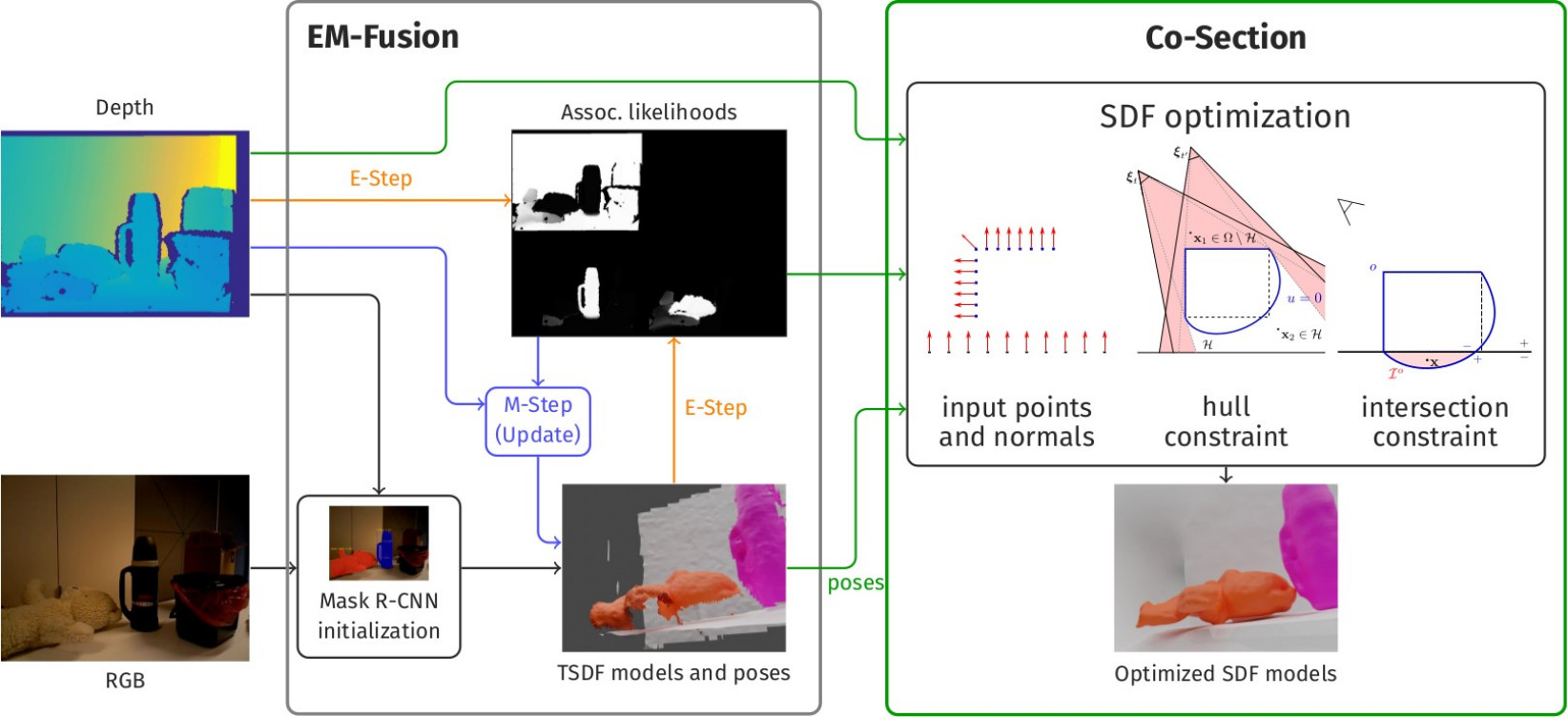

Simultaneous localization and 3D mapping of dynamic objects with EM-Fusion [ ], and shape completion using visual hull and object intersection constraints in Co-Section [ ].

When agents interact with their environment or act in dynamic scenes, they need to perceive and track moving objects. Early SLAM approaches treat dynamic scene parts as outliers and focus mainly on mapping and localizing with respect to the static environment. We pursue methods that detect moving parts of the scene and track and reconstruct them as separate objects.

In EM-Fusion [ ], we propose a tracking and mapping approach that segments objects based on semantic instance segmentation and motion cues. Within an expectation-maximization (EM) framework, the approach incrementally estimates 3D maps of the segmented objects, represented as signed distance fields (SDFs), and tracks each object's motion by aligning the depth measurements on the object with its SDF map. The EM formulation yields probabilistic soft associations of image pixels to objects, which can make mapping and tracking more robust and accurate than hard assignment decisions.
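To make the idea of soft associations concrete, here is a minimal sketch of an E-step for a single pixel, followed by a tracking cost that uses the resulting weights. It assumes a zero-mean Gaussian model on SDF residuals with a hypothetical standard deviation `sigma`, a constant hypothetical `outlier_weight` for pixels that belong to no object, and per-object priors such as those from an instance segmentation. The function names are illustrative, and this is not EM-Fusion's exact formulation.

```python
import math


def soft_associations(sdf_values, priors, sigma=0.01, outlier_weight=1e-3):
    """E-step sketch: posterior that one pixel belongs to each object.

    sdf_values[k]: SDF value (meters) of the pixel's back-projected 3D point,
        looked up in object k's map after applying k's current pose estimate.
    priors[k]: prior for object k, e.g. from instance segmentation masks.
    sigma: assumed std. dev. of a zero-mean Gaussian on SDF residuals.
    outlier_weight: assumed unnormalized weight for "belongs to no object".
    Returns one posterior per object, followed by the outlier posterior.
    """
    if len(sdf_values) != len(priors):
        raise ValueError("expected one prior per object")
    # A point lying on an object's surface has SDF value 0, so a small |SDF|
    # means a high likelihood for that object.
    weights = [p * math.exp(-0.5 * (d / sigma) ** 2)
               for d, p in zip(sdf_values, priors)]
    weights.append(outlier_weight)
    total = sum(weights)
    return [w / total for w in weights]


def weighted_sdf_cost(residuals, weights):
    """Tracking objective for one object: squared SDF residuals of its pixels,
    each scaled by that pixel's soft association to the object."""
    return sum(w * r * r for r, w in zip(residuals, weights))
```

For a pixel whose point lies exactly on the surface of object 0 and 5 cm away from object 1, `soft_associations([0.0, 0.05], [0.5, 0.5])` assigns nearly all probability mass to object 0, yet the weight never drops to exactly zero. When the pose estimates are still uncertain, this lets a pixel contribute partially to several objects instead of being committed to one by a hard decision.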

EM-Fusion maps only the visible parts of objects. In Co-Section [ ], we propose a method that completes the shapes in a physically plausible way using visual hull and object intersection constraints. The approach is variational: it optimizes the SDF maps with respect to an objective comprising data, physical plausibility, and regularization terms. For instance, we demonstrate that the method can close the contour of an object towards the supporting surface when the object is placed on a table.
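The structure of such a variational objective can be illustrated with a toy 1D analogue, sketched below under loudly stated assumptions. The toy uses a quadratic data term on observed cells and a squared-hinge plausibility penalty that forbids negative (inside) SDF values in cells that must be empty, for example free space outside the visual hull or the interior of the supporting table. It also uses a quadratic smoothness regularizer and plain gradient descent. The grid, the weights `lam_p` and `lam_r`, the step size, and all function names are hypothetical choices for illustration, not the terms or solver used in Co-Section.

```python
def energy(phi, data, observed, free, lam_p, lam_r):
    """Total energy: data + lam_p * plausibility + lam_r * regularization."""
    e_data = sum((p - d) ** 2 for p, d, m in zip(phi, data, observed) if m)
    # Cells that must be empty may not lie inside the object (negative SDF).
    e_plaus = sum(min(0.0, p) ** 2 for p, f in zip(phi, free) if f)
    e_reg = sum((phi[i + 1] - phi[i]) ** 2 for i in range(len(phi) - 1))
    return e_data + lam_p * e_plaus + lam_r * e_reg


def gradient(phi, data, observed, free, lam_p, lam_r):
    """Analytic gradient of energy() with respect to every cell."""
    g = [0.0] * len(phi)
    for i, p in enumerate(phi):
        if observed[i]:
            g[i] += 2.0 * (p - data[i])
        if free[i]:
            g[i] += 2.0 * lam_p * min(0.0, p)
    for i in range(len(phi) - 1):
        diff = phi[i + 1] - phi[i]
        g[i + 1] += 2.0 * lam_r * diff
        g[i] -= 2.0 * lam_r * diff
    return g


def complete_sdf(phi0, data, observed, free,
                 lam_p=10.0, lam_r=1.0, step=0.01, iters=20000):
    """Plain gradient descent on energy(); the small step keeps it stable."""
    phi = list(phi0)
    for _ in range(iters):
        g = gradient(phi, data, observed, free, lam_p, lam_r)
        phi = [p - step * gi for p, gi in zip(phi, g)]
    return phi


# Toy scene along a vertical line of 20 cells: the camera only sees the top
# of the object (cells 0-7, surface at cell 5); a table fills cells 15-19.
n = 20
data = [5.0 - i if i < 8 else 0.0 for i in range(n)]  # unobserved: unused
observed = [i < 8 for i in range(n)]
free = [i >= 15 for i in range(n)]  # the object must not intersect the table
phi0 = [d if m else 0.0 for d, m in zip(data, observed)]
phi = complete_sdf(phi0, data, observed, free)
```

With `lam_p = 0`, the minimizer would simply carry the last observed (negative, inside) value through all unobserved cells, including the table. The hinge term instead pulls the SDF back toward non-negative values at the table, which is the 1D counterpart of closing the contour towards the support surface.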

For high-rate, high-dynamic-range tracking, event-based cameras, which asynchronously measure logarithmic intensity changes at each pixel, are promising sensors. In [ ], we present a 3D object tracking method that estimates the 6-DoF motion of objects over short time intervals. It combines event-based tracking in one optimization layer with photometric alignment of image frames in a second layer. In experiments on synthetic data, we show that combining event- and frame-based alignment can outperform purely event-based or purely frame-based tracking for fast-moving objects. In future work, we aim to scale the method to longer sequences with real data.
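The measurement principle of such sensors can be sketched with the idealized event generation model commonly used to describe them. Under this model, a pixel emits an event of polarity +1 or -1 whenever its log intensity has changed by a contrast threshold C since that pixel's last event. The threshold value `contrast_threshold = 0.2`, the linear interpolation of timestamps between intensity samples, and the function name are assumptions for illustration. This sketch simulates a single pixel and is not the tracking method from [ ].

```python
import math


def generate_events(times, intensities, contrast_threshold=0.2):
    """Idealized event generation for one pixel.

    times: increasing sample timestamps (seconds).
    intensities: strictly positive intensities at those timestamps.
    Returns a list of (timestamp, polarity) tuples with polarity in {+1, -1}.
    """
    if len(times) != len(intensities) or not times:
        raise ValueError("need equally many, non-zero, times and intensities")
    events = []
    ref = math.log(intensities[0])  # log intensity at the last event
    prev_t, prev_l = times[0], ref
    for t, intensity in zip(times[1:], intensities[1:]):
        cur_l = math.log(intensity)
        # Emit one event per threshold crossing; a large change between two
        # samples can therefore produce several events of the same polarity.
        while abs(cur_l - ref) >= contrast_threshold:
            polarity = 1 if cur_l > ref else -1
            ref += polarity * contrast_threshold
            # Linearly interpolate when the log intensity crossed the new level.
            frac = (ref - prev_l) / (cur_l - prev_l)
            events.append((prev_t + frac * (t - prev_t), polarity))
        prev_t, prev_l = t, cur_l
    return events
```

For example, if the intensity grows from 1 to e^0.5 over one second, the log intensity rises by 0.5, so the pixel fires two positive events, at about 0.4 s and 0.8 s. Because only log-intensity changes are reported, the same relative brightness change triggers events in dark and bright regions alike, which is one source of the high dynamic range.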