2024

Kandukuri, R. K., Strecke, M., Stueckler, J.

Physics-Based Rigid Body Object Tracking and Friction Filtering From RGB-D Videos

In Proceedings of the International Conference on 3D Vision (3DV), 2024 (inproceedings)

Strecke, M., Stueckler, J.

Physically Plausible Object Pose Refinement in Cluttered Scenes

In Proceedings of the German Conference on Pattern Recognition (GCPR), 2024, to appear (inproceedings)

Kirchdorfer, L., Elich, C., Kutsche, S., Stuckenschmidt, H., Schott, L., Köhler, J. M.

Analytical Uncertainty-Based Loss Weighting in Multi-Task Learning

In Proceedings of the German Conference on Pattern Recognition (GCPR), 2024, to appear (inproceedings)

Elich, C., Kirchdorfer, L., Köhler, J. M., Schott, L.

Examining Common Paradigms in Multi-Task Learning

In Proceedings of the German Conference on Pattern Recognition (GCPR), 2024, to appear (inproceedings)

Li, H., Stueckler, J.

Online Calibration of a Single-Track Ground Vehicle Dynamics Model by Tight Fusion with Visual-Inertial Odometry

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2024 (inproceedings)

2023

Achterhold, J., Tobuschat, P., Ma, H., Büchler, D., Muehlebach, M., Stueckler, J.

Black-Box vs. Gray-Box: A Case Study on Learning Table Tennis Ball Trajectory Prediction with Spin and Impacts

In Proceedings of the 5th Annual Learning for Dynamics and Control Conference (L4DC), 211, pages: 878-890, Proceedings of Machine Learning Research, (Editors: Nikolai Matni, Manfred Morari and George J. Pappas), PMLR, June 2023 (inproceedings)

Dhédin, V., Li, H., Khorshidi, S., Mack, L., Ravi, A. K. C., Meduri, A., Shah, P., Grimminger, F., Righetti, L., Khadiv, M., Stueckler, J.

Visual-Inertial and Leg Odometry Fusion for Dynamic Locomotion

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2023 (inproceedings)

Elich, C., Armeni, I., Oswald, M. R., Pollefeys, M., Stueckler, J.

Learning-based Relational Object Matching Across Views

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2023 (inproceedings)

Guttikonda, S., Achterhold, J., Li, H., Boedecker, J., Stueckler, J.

Context-Conditional Navigation with a Learning-Based Terrain- and Robot-Aware Dynamics Model

In Proceedings of the European Conference on Mobile Robots (ECMR), 2023 (inproceedings)

2022

Xue, Y., Li, H., Leutenegger, S., Stueckler, J.

Event-based Non-Rigid Reconstruction from Contours

In Proceedings of the British Machine Vision Conference (BMVC), 2022 (inproceedings)

Achterhold, J., Krimmel, M., Stueckler, J.

Learning Temporally Extended Skills in Continuous Domains as Symbolic Actions for Planning

In Proceedings of The 6th Conference on Robot Learning, 205, pages: 225-236, Proceedings of Machine Learning Research, 6th Annual Conference on Robot Learning (CoRL 2022), 2022 (inproceedings)

2021

Strecke, M., Stückler, J.

DiffSDFSim: Differentiable Rigid-Body Dynamics With Implicit Shapes

In 2021 International Conference on 3D Vision (3DV 2021), pages: 96-105, International Conference on 3D Vision (3DV 2021), December 2021 (inproceedings)

Achterhold, J., Stueckler, J.

Explore the Context: Optimal Data Collection for Context-Conditional Dynamics Models

In Proceedings of The 24th International Conference on Artificial Intelligence and Statistics (AISTATS 2021), 130, JMLR, Cambridge, MA, April 2021, preprint CoRR abs/2102.11394 (inproceedings)

Li, H., Stueckler, J.

Tracking 6-DoF Object Motion from Events and Frames

In Proc. of IEEE Int. Conf. on Robotics and Automation (ICRA), 2021 (inproceedings)

2020

Strecke, M., Stückler, J.

Where Does It End? - Reasoning About Hidden Surfaces by Object Intersection Constraints

In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), pages: 9589-9597, IEEE, Piscataway, NJ, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), June 2020, preprint CoRR abs/2004.04630 (inproceedings)

Wang, R., Yang, N., Stückler, J., Cremers, D.

DirectShape: Photometric Alignment of Shape Priors for Visual Vehicle Pose and Shape Estimation

In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), pages: 11067-11073, IEEE, Piscataway, NJ, IEEE International Conference on Robotics and Automation (ICRA 2020), May 2020, arXiv:1904.10097 (inproceedings)

Kandukuri, R., Achterhold, J., Moeller, M., Stueckler, J.

Learning to Identify Physical Parameters from Video Using Differentiable Physics

Proc. of the 42nd German Conference on Pattern Recognition (GCPR), 2020, GCPR 2020 Honorable Mention, preprint https://arxiv.org/abs/2009.08292 (conference)

Bosch, N., Achterhold, J., Leal-Taixe, L., Stückler, J.

Planning from Images with Deep Latent Gaussian Process Dynamics

Proceedings of the 2nd Conference on Learning for Dynamics and Control (L4DC), 120, pages: 640-650, Proceedings of Machine Learning Research (PMLR), (Editors: Alexandre M. Bayen and Ali Jadbabaie and George Pappas and Pablo A. Parrilo and Benjamin Recht and Claire Tomlin and Melanie Zeilinger), 2020, preprint arXiv:2005.03770 (conference)

Pinneri, C., Sawant, S., Blaes, S., Achterhold, J., Stueckler, J., Rolinek, M., Martius, G.

Sample-efficient Cross-Entropy Method for Real-time Planning

In Conference on Robot Learning 2020, 2020 (inproceedings)

Mallick, A., Stückler, J., Lensch, H.

Learning to Adapt Multi-View Stereo by Self-Supervision

In Proceedings of the British Machine Vision Conference (BMVC), 2020, preprint https://arxiv.org/abs/2009.13278 (inproceedings)

2019

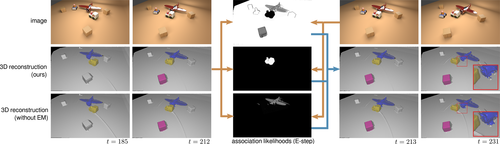

Strecke, M., Stückler, J.

EM-Fusion: Dynamic Object-Level SLAM With Probabilistic Data Association

In Proceedings IEEE/CVF International Conference on Computer Vision 2019 (ICCV), pages: 5864-5873, IEEE, 2019 IEEE/CVF International Conference on Computer Vision (ICCV), October 2019 (inproceedings)

Zhu, D., Munderloh, M., Rosenhahn, B., Stückler, J.

Learning to Disentangle Latent Physical Factors for Video Prediction

In Pattern Recognition - Proceedings German Conference on Pattern Recognition (GCPR), Springer International, German Conference on Pattern Recognition (GCPR), September 2019 (inproceedings)

Elich, C., Engelmann, F., Kontogianni, T., Leibe, B.

3D Birds-Eye-View Instance Segmentation

In Pattern Recognition - Proceedings 41st DAGM German Conference, DAGM GCPR 2019, pages: 48-61, Lecture Notes in Computer Science (LNCS) 11824, (Editors: Fink G.A., Frintrop S., Jiang X.), Springer, 2019 German Conference on Pattern Recognition (GCPR), September 2019, ISSN: 0302-9743 (inproceedings)

2018

Schubert, D., Usenko, V., Demmel, N., Stueckler, J., Cremers, D.

Direct Sparse Odometry With Rolling Shutter

In European Conference on Computer Vision (ECCV), September 2018, oral presentation (inproceedings)

Yang, N., Wang, R., Stueckler, J., Cremers, D.

Deep Virtual Stereo Odometry: Leveraging Deep Depth Prediction for Monocular Direct Sparse Odometry

In European Conference on Computer Vision (ECCV), September 2018, oral presentation, preprint https://arxiv.org/abs/1807.02570 (inproceedings)

Schubert, D., Goll, T., Demmel, N., Usenko, V., Stueckler, J., Cremers, D.

The TUM VI Benchmark for Evaluating Visual-Inertial Odometry

In IEEE International Conference on Intelligent Robots and Systems (IROS), 2018, arXiv:1804.06120 (inproceedings)

Achterhold, J., Koehler, J. M., Schmeink, A., Genewein, T.

Variational Network Quantization

In International Conference on Learning Representations, 2018 (inproceedings)

Alperovich, A., Johannsen, O., Strecke, M., Goldluecke, B.

Light field intrinsics with a deep encoder-decoder network

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018 (inproceedings)

Strecke, M., Goldluecke, B.

Sublabel-accurate convex relaxation with total generalized variation regularization

In German Conference on Pattern Recognition (Proc. GCPR), 2018 (inproceedings)

2017

von Stumberg, L., Usenko, V., Engel, J., Stueckler, J., Cremers, D.

From Monocular SLAM to Autonomous Drone Exploration

In European Conference on Mobile Robots (ECMR), September 2017 (inproceedings)

Ma, L., Stueckler, J., Kerl, C., Cremers, D.

Multi-View Deep Learning for Consistent Semantic Mapping with RGB-D Cameras

In IEEE International Conference on Intelligent Robots and Systems (IROS), Vancouver, Canada, 2017 (inproceedings)

Strecke, M., Alperovich, A., Goldluecke, B.

Accurate depth and normal maps from occlusion-aware focal stack symmetry

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017 (inproceedings)

Kuznietsov, Y., Stueckler, J., Leibe, B.

Semi-Supervised Deep Learning for Monocular Depth Map Prediction

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017 (inproceedings)

Alperovich, A., Johannsen, O., Strecke, M., Goldluecke, B.

Shadow and Specularity Priors for Intrinsic Light Field Decomposition

In Energy Minimization Methods in Computer Vision and Pattern Recognition (EMMCVPR), 2017 (inproceedings)

Kasyanov, A., Engelmann, F., Stueckler, J., Leibe, B.

Keyframe-Based Visual-Inertial Online SLAM with Relocalization

In IEEE/RSJ Int. Conference on Intelligent Robots and Systems, IROS, 2017 (inproceedings)

Engelmann, F., Stueckler, J., Leibe, B.

SAMP: Shape and Motion Priors for 4D Vehicle Reconstruction

In IEEE Winter Conference on Applications of Computer Vision, WACV, 2017 (inproceedings)

2016

zur Jacobsmühlen, J., Achterhold, J., Kleszczynski, S., Witt, G., Merhof, D.

Robust calibration marker detection in powder bed images from laser beam melting processes

In 2016 IEEE International Conference on Industrial Technology (ICIT), pages: 910-915, March 2016 (inproceedings)

Usenko, V., Engel, J., Stueckler, J., Cremers, D.

Direct Visual-Inertial Odometry with Stereo Cameras

In IEEE International Conference on Robotics and Automation (ICRA), 2016 (inproceedings)

Ma, L., Kerl, C., Stueckler, J., Cremers, D.

CPA-SLAM: Consistent Plane-Model Alignment for Direct RGB-D SLAM

In IEEE International Conference on Robotics and Automation (ICRA), 2016 (inproceedings)

Klostermann, D., Osep, A., Stueckler, J., Leibe, B.

Unsupervised Learning of Shape-Motion Patterns for Objects in Urban Street Scenes

In British Machine Vision Conference (BMVC), 2016 (inproceedings)

Kochanov, D., Osep, A., Stueckler, J., Leibe, B.

Scene Flow Propagation for Semantic Mapping and Object Discovery in Dynamic Street Scenes

In IEEE/RSJ Int. Conference on Intelligent Robots and Systems, IROS, 2016 (inproceedings)

Engelmann, F., Stueckler, J., Leibe, B.

Joint Object Pose Estimation and Shape Reconstruction in Urban Street Scenes Using 3D Shape Priors

In Proc. of the German Conference on Pattern Recognition (GCPR), 2016 (inproceedings)

2015

Jaimez, M., Souiai, M., Stueckler, J., Gonzalez-Jimenez, J., Cremers, D.

Motion Cooperation: Smooth Piece-Wise Rigid Scene Flow from RGB-D Images

In Proc. of the Int. Conference on 3D Vision (3DV), 2015, video: https://www.youtube.com/watch?v=qjPsKb-_kvE (inproceedings)

Holz, D., Topalidou-Kyniazopoulou, A., Stueckler, J., Behnke, S.

Real-Time Object Detection, Localization and Verification for Fast Robotic Depalletizing

In IEEE International Conference on Intelligent Robots and Systems (IROS), 2015 (inproceedings)

Kerl, C., Stueckler, J., Cremers, D.

Dense Continuous-Time Tracking and Mapping with Rolling Shutter RGB-D Cameras

In IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 2015 (inproceedings)

Engel, J., Stueckler, J., Cremers, D.

Large-Scale Direct SLAM with Stereo Cameras

In IEEE International Conference on Intelligent Robots and Systems (IROS), 2015 (inproceedings)

Maier, R., Stueckler, J., Cremers, D.

Super-Resolution Keyframe Fusion for 3D Modeling with High-Quality Textures

In International Conference on 3D Vision (3DV), 2015 (inproceedings)

Usenko, V., Engel, J., Stueckler, J., Cremers, D.

Reconstructing Street-Scenes in Real-Time From a Driving Car

In Proc. of the Int. Conference on 3D Vision (3DV), October 2015 (inproceedings)

2014

Stueckler, J., Behnke, S.

Adaptive Tool-Use Strategies for Anthropomorphic Service Robots

In Proc. of the 14th IEEE-RAS International Conference on Humanoid Robots (Humanoids), 2014 (inproceedings)

Droeschel, D., Stueckler, J., Behnke, S.

Local Multi-Resolution Surfel Grids for MAV Motion Estimation and 3D Mapping

In Proc. of the 13th International Conference on Intelligent Autonomous Systems (IAS), 2014 (inproceedings)